Tube strikes are an unavoidable part of London life, for better or worse, and back on Monday 9th of January 2017 most Zone 1 Tube stations were closed due to strike action. For those who used the Underground as part of their daily commute this undoubtedly led to issues with getting into work, with many commuters being forced to give up what was left of their personal space as they crammed onto replacement buses.

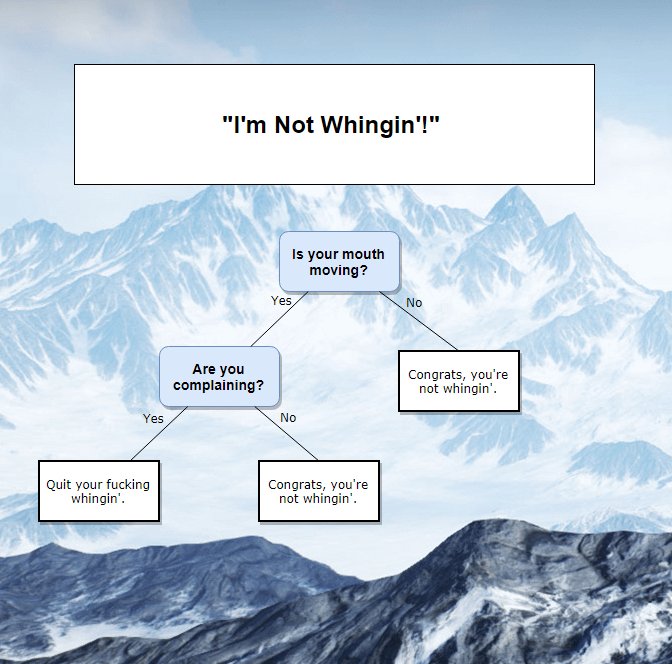

Now it doesn’t take a genius to figure that this would make at least some of the London populace angry, but just how angry were they as a whole? To find out we turned to Twitter, the world’s #1 whinging platform.

Twitter offers a very nifty API for gathering tweets, which includes filters for hashtags, time periods, and even location. This makes our job of grabbing ‘relevant’ tweets much easier. We collected a huge dataset (460,000, give or take) of tweets from London on the day of the strike (9th of January) and another huge dataset of tweets from the 5th of January to give us a baseline (one could imagine Londoners might just be pretty angry in general).

So having collected our massive dataset(s), how do we find out whether people are angry or not without having to resort to reading through the tweets one by one? We could always leverage one of those fancy machine learning algorithms to help with that task, namely a classifier, but for that we needed yet another dataset, though this time one with the answers (i.e. labels).

We again turned to the Twitter API and collected more tweets, though this time ensuring we knew which tweets were angry and which weren’t. We took a pretty simple approach:

- How do you know if a tweet is angry? Well, #Angry is a good start.

- How do you know if a tweet is not angry? Well, #Happy will probably do.

- For this training dataset, we decided to collect 100,000 tweets of each kind.

So we now have three datasets:

- HappyTweets

- AngryTweets

- TubeStrikeTweets

Now tweets are an example of what’s known in the business as unstructured data: raw, dirty, and untabulated, which can make it tricky to use. To get it into a more usable form, we had to carry out a few preprocessing steps first. Let’s start with the training data, AngryTweets and HappyTweets:

- We load the data from the .csv file line by line, where each line has an ID, Date, Username, language and the tweet itself.

- The tweet is tokenized (split the text by space, so “DUCK THE TUBE” (sorry, autocorrect) becomes [“DUCK”, “THE”, “TUBE”]) and each word is cleaned:

  - All words are made lowercase.

  - Trailing and leading spaces are removed.

  - Symbols and punctuation like !"£$%^&*() etc. are removed.

  - URLs are given a special token to identify that someone posted a link.

  - Words are also stemmed, essentially reducing them to their base root, something like this:

    - Pythoning -> Python

    - Pythoner -> Python

    - Pythonista -> Python

    - Python -> Python etc.

- When tokenizing tweets we also build up a dictionary of unique words and how often they appear, something like this:

  - London: 500

  - Tube: 300

  - Teatime: 15,000

- For the training dataset we also store the label, i.e. whether the tweet was angry or not:

  - Angry or Happy, depending on which dataset it comes from.

  - This frames the problem as a binary classification problem.
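The cleaning steps above can be sketched in a few lines of Python. This is only a rough illustration, not the code we actually ran: `URL_TOKEN`, `crude_stem`, `clean_tweet` and `build_vocabulary` are made-up names, and the toy suffix-stripper stands in for a real stemmer purely so the example runs with the standard library alone.

```python
import re
from collections import Counter

URL_TOKEN = "<url>"  # hypothetical placeholder token for links
SUFFIXES = ("ista", "ing", "er")  # toy suffix list, for illustration only


def crude_stem(word):
    """Strip one known suffix; a stand-in for a real stemming algorithm."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def clean_tweet(text):
    """Split on whitespace, then lowercase, strip punctuation and stem each token."""
    tokens = []
    for raw in text.split():
        lowered = raw.lower().strip()
        if lowered.startswith(("http://", "https://")):
            tokens.append(URL_TOKEN)  # record that a link was posted
            continue
        word = re.sub(r"[^\w]", "", lowered)  # drop symbols and punctuation
        if word:
            tokens.append(crude_stem(word))
    return tokens


def build_vocabulary(tweets):
    """Count how often each cleaned token appears across all tweets."""
    counts = Counter()
    for tweet in tweets:
        counts.update(clean_tweet(tweet))
    return counts
```

For example, `clean_tweet("DUCK THE TUBE!")` gives `["duck", "the", "tube"]`, and `build_vocabulary` returns a `Counter` mapping each token to its frequency.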

The way in which the final data was represented was something like this:

| this | is | a | fake | tweet | I | hate | love | strikes | Angry? |
|---|---|---|---|---|---|---|---|---|---|
| 2 | 2 | 3 | 1 | 1 | 1 | 1 | 0 | 1 | 1 |
| 1 | 2 | 0 | 0 | 0 | 1 | 0 | 1 | 1 | 0 |
| 1 | 0 | 2 | 0 | 0 | 1 | 1 | 0 | 0 | 1 |

Each word is a separate feature (one column), each value is the frequency of that word in a given tweet, and the final column holds the label of the tweet.
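As a rough sketch of how one tweet turns into one row of that table, assuming the tweet has already been tokenized and cleaned: the function name `to_feature_row` and the tiny vocabulary are hypothetical, chosen to mirror the column headers above.

```python
from collections import Counter


def to_feature_row(tokens, vocabulary, label=None):
    """Return word counts in vocabulary order, with the label appended if given."""
    counts = Counter(tokens)
    row = [counts[word] for word in vocabulary]  # missing words count as 0
    if label is not None:
        row.append(label)  # 1 = angry, 0 = happy
    return row


# Hypothetical vocabulary, in a fixed column order.
vocabulary = ["this", "is", "a", "fake", "tweet", "i", "hate", "love", "strikes"]

# A made-up angry tweet, already lowercased and split into tokens.
row = to_feature_row("i hate strikes i hate this".split(), vocabulary, label=1)
```

Keeping the vocabulary in a fixed order matters: the classifier only ever sees positions in the row, so the same word has to land in the same column for every tweet.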

Now that we had our data in this slightly nicer format, we could use it to train a classifier. There are a variety of classifiers you can use, each with its own advantages and disadvantages, and it can be a long process to select and tune the optimal model. With that in mind, we opted for a linear SVM because why not. SVMs are pretty powerful as far as machine learning algorithms go, allowing for perhaps a smarter decision boundary than that offered by an alternative such as logistic regression.

It’s all good having a model, but we also needed a way of saying how good said model was. A simple metric such as accuracy was good enough to run with, considering we had fairly good control over the number of examples in each class.

We trained a linear SVM using 10-fold cross-validation, which helped us guard against a dire problem in any modelling exercise: overfitting. Without going into too much detail, overfitting is when your model basically memorises your training data, which is great if that’s all you are ever working with but terrible when you encounter something new. One good control for this is regularisation, which adds a penalty to overly complex models (i.e. ones that have likely overfit). We tuned the strength of our regularisation using the aforementioned cross-validation, which let us compare model performance across settings and pick the best of the bunch.
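For the curious, here is a minimal sketch of k-fold cross-validation used to pick a regularisation strength. It is not our actual training code: the helper names (`k_fold_indices`, `cross_val_accuracy`, `pick_best`) are made up, and the model itself is left as a callable you pass in, so the same scaffolding would work with a linear SVM from whichever library you prefer.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs that split range(n_samples) into k folds.

    Assumes the data has already been shuffled.
    """
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_val_accuracy(fit, X, y, k=10):
    """Mean held-out accuracy; fit(X_train, y_train) must return a predict function."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        predict = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        y_pred = [predict(X[i]) for i in test_idx]
        scores.append(accuracy([y[i] for i in test_idx], y_pred))
    return sum(scores) / len(scores)


def pick_best(candidates, make_fit, X, y, k=10):
    """Return the candidate strength with the highest mean cross-validated accuracy.

    make_fit(strength) must return a fit function suitable for cross_val_accuracy.
    """
    return max(candidates, key=lambda c: cross_val_accuracy(make_fit(c), X, y, k))
```

Each fold is held out exactly once, so every candidate strength is scored only on tweets its model never saw during training. That is what makes the comparison between settings a fair one.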

Finally, we could test the performance of the model on a completely separate labelled dataset (basically one that wasn’t used in training). Applying our model to this withheld dataset, we ended up with a fairly respectable accuracy of 0.94, which doesn’t seem so bad given the task. Confident that our model wouldn’t do a completely awful job, we fed the preprocessed tweets from the day of the strike into our trained model and collected the results, hopefully giving us some idea of just how angry these poor Londoners were.

Ignoring the obvious issues in directly comparing tweets from the day of the strike to tweets from another day, plotting the proportion of angry tweets for the two days doesn’t really show too much of a difference. Based on this very simple look at the data it’s hard to come to any strong conclusion, but I think it’s safe to say that, on average, Londoners are more peeved than not, at least when it comes to Twitter.
